The Practical Guide to Fail Fast: Transforming Rhetoric into Reality

Introduction: A Reality Check

Recently, I attended an event featuring an executive Q&A session. When asked about an upcoming departmental transformation, one leader confidently declared they were embracing a "fail fast" approach, "testing processes and finding out what works and what doesn't."

I deeply value the experimental nature of a fail-fast methodology; implemented with the right culture and mindset, its value is off the charts. Yet something about this declaration felt hollow. It came across as empty rhetoric, a convenient slogan that really meant: "We're not going to plan properly or do the work to make sure our first iteration is well-engineered. Instead, we'll use this philosophy as a ready-made excuse for any failures resulting from poor planning and execution."

This experience highlighted a troubling pattern I've observed where "fail fast" has become the corporate equivalent of a get-out-of-jail-free card, often cited to excuse poor execution rather than support intentional learning.

Failing Fast, Drowning Faster

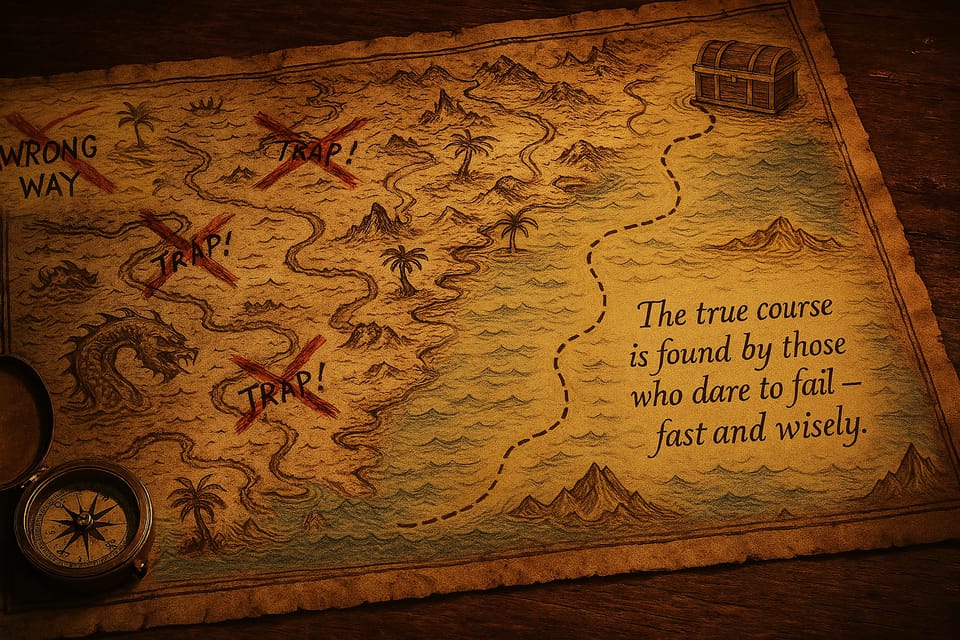

No competent Pirate Captain would launch a voyage during hurricane season without proper charts, provisions or a seaworthy vessel. Yet in today's business environment, organisations frequently embark on high-stakes projects under the banner of "failing fast" with precisely this level of recklessness.

Consider the seasoned Pirate Captain's approach: they don't test their ship's seaworthiness by sailing straight into a hurricane. Instead, they make calculated decisions about when to take risks, gradually testing their vessel and Crew in increasingly challenging but manageable conditions and using what each voyage teaches them to plan the next (this feedback loop is key). They understand the difference between a bold expedition and a foolhardy mission, and they know that even the most daring Pirates survive by respecting the power of predictable dangers.

The True Meaning of "Fail Fast"

At its core, "fail fast" is not about celebrating failure or embracing chaos. Rather, it's about:

- Accelerated learning cycles: Creating tight feedback loops that quickly validate or invalidate key assumptions.

- Contained experimentation: Testing critical hypotheses in controlled environments before significant resources are committed.

- Productive failure: Designing tests specifically to extract maximum learning value regardless of outcome.

- Intelligent risk management: Taking calculated risks where the potential for learning outweighs the cost of failure.

Organisations like SpaceX exemplify this approach, building rapid prototyping and testing into their development cycles while maintaining rigorous engineering standards and safety protocols.

Implementing "Fail Fast" with Intention: A Gradual Framework

Contrary to popular belief, "fail fast" doesn't mean "fail immediately on all fronts." The most effective implementation is gradual, systematic and increasingly ambitious as capability and confidence build. Like a Pirate Crew that starts with coastal raids before attempting open-ocean voyages, organisations should approach failure as a skill to be developed over time.

Phase 1: Preparation for Productive Failure

Identify Uncertainty Zones

- Map out areas of your project with the highest uncertainty and greatest potential impact.

- These become prime candidates for early testing and validation.
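One way to make this mapping concrete is to score each assumption for uncertainty and impact, then test the highest scorers first. The sketch below assumes a simple risk-matrix product on 1 to 5 scales; the `Assumption` class, the scales and the example backlog are hypothetical illustrations, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    """A project assumption scored on hypothetical 1-5 scales."""
    name: str
    uncertainty: int  # 1 = well understood, 5 = pure guesswork
    impact: int       # 1 = minor, 5 = could sink the project

    @property
    def priority(self) -> int:
        # Risk-matrix style score: high uncertainty AND high impact rise to the top.
        return self.uncertainty * self.impact


def rank_uncertainty_zones(assumptions: list[Assumption]) -> list[Assumption]:
    """Order assumptions from most to least urgent to test."""
    return sorted(assumptions, key=lambda a: a.priority, reverse=True)


backlog = [
    Assumption("Customers will self-serve onboarding", uncertainty=5, impact=4),
    Assumption("Existing CRM can store the new fields", uncertainty=2, impact=3),
    Assumption("Support team can absorb extra tickets", uncertainty=4, impact=2),
]
for a in rank_uncertainty_zones(backlog):
    print(a.priority, a.name)
```

A multiplicative score is only one option; some teams prefer to plot assumptions on a two-axis grid and discuss the top-right quadrant rather than trust a single number.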

Design Learning Experiments

- Create specific, measurable hypotheses for each uncertainty; you may need to train your Crew in how to develop good hypotheses.

- Design minimum viable tests that can validate or invalidate these hypotheses.

- Establish clear success criteria and data collection methods.
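A hypothesis, its success criteria and its minimum sample size can be captured as a small record before the test runs, so the result is judged against criteria agreed in advance. This is a minimal sketch; the field names, the absolute-lift success rule and the sign-up example are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Written down BEFORE the experiment runs, so the goalposts can't move."""
    statement: str
    metric: str
    baseline: float         # current value of the metric
    min_improvement: float  # smallest absolute lift that counts as success
    min_sample_size: int    # don't judge the result on less data than this


def evaluate(h: Hypothesis, observed: float, sample_size: int) -> str:
    """Apply the pre-agreed success criteria to an observed result."""
    if sample_size < h.min_sample_size:
        return "inconclusive: keep collecting data"
    if observed - h.baseline >= h.min_improvement:
        return "validated"
    return "invalidated"


shorter_form = Hypothesis(
    statement="A shorter sign-up form increases completion",
    metric="signup_completion_rate",
    baseline=0.40,
    min_improvement=0.05,
    min_sample_size=1000,
)
print(evaluate(shorter_form, observed=0.47, sample_size=1200))
```

Freezing the dataclass is a small nudge towards honesty: once the experiment starts, the criteria can't be quietly edited in place.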

Build Failure Infrastructure

- Develop systems to capture, analyse and distribute learnings from failures.

- Create psychological safety that encourages honest reporting of failures; team culture is vital to the long-term success of experimentation.

- Allocate resources specifically for iteration after initial failures.

Phase 2: Controlled Execution

Start Small and Contained

- Begin with low-stakes experiments that test fundamental assumptions.

- Create "bulkheads" that prevent failures in one area from contaminating others.

- Establish clear boundaries between experimentation and production environments (feature flags, covered below, also enable controlled experimentation within production environments).
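In software, "bulkheads" are a well-known resilience pattern: each experiment gets its own bounded share of capacity, so a misbehaving experiment can exhaust only its own compartment. The sketch below assumes a simple concurrency cap built on Python's `threading.BoundedSemaphore`; the `Bulkhead` class and its `fallback` behaviour are hypothetical, and production systems would more likely rely on a resilience library or platform-level limits.

```python
import threading


class Bulkhead:
    """Caps concurrent calls into one experiment so a misbehaving experiment
    cannot exhaust capacity that the rest of the system depends on."""

    def __init__(self, name: str, max_concurrent: int):
        self.name = name
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, fn, *args, fallback=None, **kwargs):
        # Non-blocking acquire: if this compartment is full, shed the request at once.
        if not self._slots.acquire(blocking=False):
            return fallback
        try:
            return fn(*args, **kwargs)
        except Exception:
            # The failure stays inside the compartment; callers get the stable fallback.
            return fallback
        finally:
            self._slots.release()


new_pricing = Bulkhead("new-pricing-experiment", max_concurrent=10)
price = new_pricing.call(lambda: 1 / 0, fallback="standard price")
print(price)
```

Returning a fallback rather than raising is a design choice: the experimental path fails quietly while the proven path keeps the ship afloat.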

Implement Graduated Risk Protocols

- As concepts prove viable through small tests, gradually increase investment (time/budget/resources) and scope.

- Develop stage-gate processes that require specific validation before advancing.

- Maintain the option to pivot or terminate based on clear decision criteria.
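A stage gate can be expressed as a rule that advances, holds or terminates a rollout based on criteria agreed in advance. In this sketch, the stage percentages, the 2% error-rate ceiling and the `next_stage` function are all illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical exposure stages (% of users) that a rollout moves through.
STAGES = [1, 5, 25, 50, 100]


@dataclass
class GateResult:
    error_rate: float   # observed error rate at the current stage
    metric_lift: float  # observed change in the target metric vs control


def next_stage(current_pct: int, result: GateResult,
               max_error_rate: float = 0.02, min_lift: float = 0.0) -> int:
    """Advance, hold or terminate. Returns the next exposure %, or 0 to stop."""
    if result.error_rate > max_error_rate:
        return 0                # kill criterion breached: terminate
    if result.metric_lift < min_lift:
        return current_pct      # not yet validated: hold, learn, or pivot
    i = STAGES.index(current_pct)
    return STAGES[min(i + 1, len(STAGES) - 1)]


print(next_stage(5, GateResult(error_rate=0.004, metric_lift=0.03)))  # 25
```

Keeping the gate logic this explicit makes the "clear decision criteria" reviewable before the voyage begins, rather than argued over afterwards.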

Modern Tooling for Controlled Failure

Modern technology provides powerful tools that enable precise control over experimentation. These tools create what our Pirate Captain might call "compartmentalised risk" - the ability to test new approaches with specific Crews or ships without endangering the entire fleet.

Feature Flags: The Navigator's Selective Charts

Feature flags, such as those offered by platforms like LaunchDarkly, are one of the most powerful mechanisms for controlled experimentation. These tools allow organisations to:

Implement Targeted Rollouts

- Release new features to specific user segments (e.g. internal users, beta testers or 5% of customers; the percentage can go as low as 0.001%, meaning only 1 in 100,000 users experiences the feature initially).

- Create sophisticated targeting rules based on user characteristics, geography, or usage patterns.

- Instantly disable problematic features without requiring code deployments.
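Percentage rollouts commonly rely on deterministic bucketing: hashing a user identifier so the same user always gets the same answer, with a kill switch checked first. The sketch below illustrates that idea only. It is not LaunchDarkly's SDK or API, and the `FeatureFlag` fields, the SHA-256 bucketing and the 100,000-bucket resolution (chosen so a 0.001% rollout maps to exactly one bucket) are assumptions.

```python
import hashlib
from dataclasses import dataclass, field

BUCKETS = 100_000  # fine enough that a 0.001% rollout is exactly one bucket


@dataclass
class FeatureFlag:
    key: str
    rollout_fraction: float = 0.0  # 0.05 = 5%, 0.00001 = 0.001% (1 in 100,000)
    segments: set[str] = field(default_factory=set)  # e.g. {"internal", "beta"}
    killed: bool = False           # kill switch: overrides everything else


def bucket(flag_key: str, user_id: str) -> int:
    """Deterministically map a user to a bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).hexdigest()
    return int(digest[:15], 16) % BUCKETS


def is_enabled(flag: FeatureFlag, user_id: str, user_segments: set[str]) -> bool:
    if flag.killed:
        return False  # switched off instantly, no code deployment required
    if flag.segments & user_segments:
        return True   # targeted segment, e.g. internal users or beta testers
    # Same user + same flag -> same bucket, so each user gets a stable experience.
    return bucket(flag.key, user_id) < round(flag.rollout_fraction * BUCKETS)


sail = FeatureFlag("new-sail-design", rollout_fraction=0.05, segments={"internal"})
print(is_enabled(sail, "captain", {"internal"}))  # True
sail.killed = True
print(is_enabled(sail, "captain", {"internal"}))  # False
```

Including the flag key in the hash means a user who lands in the first 5% for one flag is not automatically in the first 5% for every other flag.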

Risk Reduction Strategy: Think of feature flags as allowing your ship to test a new sail design with just one small sail rather than replacing all sails at once; if the design fails, you simply lower that sail without compromising the vessel's overall capability.

Multi-Variant Testing: Parallel Expeditions

Advanced A/B and multivariate testing platforms enable organisations to:

Deploy Competing Hypotheses Simultaneously

- Test multiple versions of features against each other in real time.

- Automatically allocate more traffic to better-performing variants.

- Generate statistically significant insights about user preferences and behaviours.

This approach replicates a Pirate fleet sending several small scouting parties along different potential routes, each reporting back on conditions before committing the main fleet to any single path.
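Automatically shifting traffic towards better-performing variants is usually some form of multi-armed bandit. The sketch below swaps in the simplest such strategy, epsilon-greedy, purely for illustration; commercial platforms may use other methods (Thompson sampling is a common choice), and the `EpsilonGreedy` class, its 10% exploration rate and the simulated conversion rates are assumptions.

```python
import random


class EpsilonGreedy:
    """Send most traffic to the best-performing variant so far, while a
    fraction `epsilon` keeps exploring the alternatives."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.shows = {v: 0 for v in variants}
        self.wins = {v: 0 for v in variants}
        self.rng = random.Random(seed)

    def rate(self, variant):
        shows = self.shows[variant]
        return self.wins[variant] / shows if shows else 0.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))  # explore
        return max(self.shows, key=self.rate)         # exploit the current leader

    def record(self, variant, converted):
        self.shows[variant] += 1
        self.wins[variant] += int(converted)


# Simulated traffic with hypothetical true conversion rates.
true_rates = {"A": 0.05, "B": 0.10, "C": 0.02}
world = random.Random(42)
bandit = EpsilonGreedy(list(true_rates), epsilon=0.1, seed=7)
for _ in range(20_000):
    v = bandit.choose()
    bandit.record(v, world.random() < true_rates[v])
print(bandit.shows)
```

With `epsilon=0.1`, roughly one visitor in ten is still sent to a randomly chosen variant, which keeps every scouting party reporting back in case early results were misleading.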

Case Study: Project Pirate - A Voyage of Gradual Risk

In our extended "Project Pirate" metaphor, imagine transforming a conventional merchant vessel into an effective privateering operation, not by immediately attacking the Spanish Armada, but through deliberate, incremental steps:

Phase 1: Harbour Training (Low Risk Learning)

- Practice basic manoeuvres in sheltered waters.

- Test Crew coordination through simulated exercises.

- Identify and address skill gaps in a forgiving environment.

Phase 2: Coastal Expeditions (Contained Risk)

- Undertake short voyages in familiar territories.

- Test hypotheses about small tactical advantages.

- Practice recovery from minor setbacks.

Phase 3: Open Water Exploration (Managed Risk)

- Begin testing bolder hypotheses in less familiar territories.

- Deploy small, autonomous scouting vessels for higher-risk reconnaissance.

- Implement rapid feedback cycles to capitalise on new discoveries.

Phase 4: Strategic Privateering (Optimised Risk)

- Launch ambitious expeditions with high potential rewards.

- Apply accumulated learning to navigate complex challenges.

- Maintain strict discipline about seasonal hazards and known risks.

The key insight: even the boldest Pirates don't start by attacking the Spanish treasure fleet! They build capabilities incrementally, taking increasingly ambitious but calculated risks based on accumulated learning. And they never, under any circumstances, deliberately sail into hurricane season unprepared!

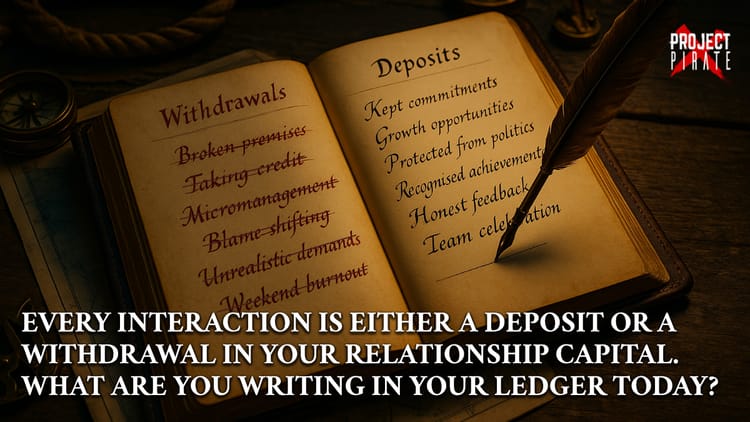

Preventing "Fail Fast" from Becoming Empty Rhetoric

When "fail fast" becomes a catchphrase rather than a methodology, it creates the organisational equivalent of a mutiny, undermining genuine innovation while creating unnecessary risk. Here are warning signs and preventive measures to ensure your approach remains authentic:

- Failure without learning infrastructure: If your organisation celebrates failure but has no systematic way to capture and apply lessons, you're simply failing.

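- Reckless resource allocation: If experiments have no budget for iteration and no cap on the cost of any single failure, the approach is reckless; true "fail fast" approaches budget for multiple iterations and contain the cost of individual failures.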

- Failure amnesia: When the same failures occur repeatedly, your organisation is experiencing failure without learning.

- Post-hoc rationalisation: If "fail fast" is invoked mainly to explain unexpected failures after the fact, rather than being designed into projects from the beginning, it has become an excuse rather than a method.
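A learning log does not need to be elaborate to catch the first and third warning signs above. This minimal sketch assumes each failure is logged with a root cause and a lesson; the `LearningLog` class, the normalisation of root-cause text and the repeat threshold are hypothetical choices.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LearningLog:
    """A minimal record of failures and the lessons drawn from them."""
    entries: list[dict] = field(default_factory=list)

    def record(self, experiment: str, root_cause: str, lesson: str) -> None:
        # Normalise root causes so "No load test" and "no load test " match.
        self.entries.append({"experiment": experiment,
                             "root_cause": root_cause.strip().lower(),
                             "lesson": lesson})

    def repeated_root_causes(self, threshold: int = 2) -> dict[str, int]:
        """Root causes seen at least `threshold` times: a sign of failure amnesia."""
        counts = Counter(e["root_cause"] for e in self.entries)
        return {cause: n for cause, n in counts.items() if n >= threshold}

    def missing_lessons(self) -> list[str]:
        """Failures logged with no lesson attached: failing, not learning."""
        return [e["experiment"] for e in self.entries if not e["lesson"].strip()]


log = LearningLog()
log.record("checkout-v2", "No load test", "Load test before any 10% rollout")
log.record("search-rerank", "no load test ", "")
print(log.repeated_root_causes(), log.missing_lessons())
```

In practice the same checks could run over whatever incident or post-mortem tracker the organisation already uses; the point is that repeated causes and missing lessons are surfaced automatically rather than remembered.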

Countering the Rhetoric with Substance

To prevent "fail fast" from becoming mere rhetoric in your organisation:

- Document your hypothesis before testing: This requires teams to clearly articulate what they're testing and what would constitute success or failure before experiments begin. In more structured experiments, this includes outlining a null hypothesis (H₀) - what you'd expect if there's no effect - and an alternative hypothesis (H₁) representing the expected outcome.

- Create a "failure budget": Explicitly allocate resources for experimentation and learning, separate from operational budgets. This also makes it easy to track, on an ongoing basis, what percentage of resources or costs goes to experimentation.

- Build failure ceremonies: Establish structured processes for analysing failures, such as dedicated post-mortem meetings with standard protocols or After Action Reports (the acronym, AAR, even sounds like Pirate talk!).

- Distinguish between productive and unproductive failure: Create clear criteria that separate valuable learning failures from execution mistakes or negligence.
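For a conversion-style metric, the H₀/H₁ pair can be tested with a standard two-proportion z-test. The sketch assumes a one-sided test (H₁: the variant converts better than control) and uses only the standard library; the function name and example counts are illustrative, and the significance level should be fixed in the documented hypothesis before the experiment starts.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """One-sided test of H0: p_b == p_a against H1: p_b > p_a.
    Returns (z statistic, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if H0 (no effect) holds
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z) for a standard normal
    return z, p_value


ALPHA = 0.05  # chosen and written down before the experiment
z, p = two_proportion_z_test(400, 5000, 460, 5000)  # control 8%, variant 9.2%
print(f"z = {z:.2f}, p = {p:.4f}, reject H0: {p < ALPHA}")
```

You would reject H₀ only if the p-value falls below the significance level recorded beforehand; choosing the threshold after seeing the data is exactly the post-hoc rationalisation described above.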

Implementation Best Practices

To leverage these approaches effectively within a "fail fast" framework:

- Start with observability: Ensure robust monitoring and analytics are in place before experimenting with new features. Modern cloud environments offer a wide range of options for this.

- Define precise kill criteria: Establish clear thresholds for automatically disabling experiments that show negative impacts.

- Layer your defences: Use multiple control mechanisms (e.g. feature flags + monitoring + automated rollbacks). Automated rollbacks can also spare your Crew middle-of-the-night call-outs when thresholds are breached.

- Practice feature flag hygiene: Regularly audit and remove outdated flags to prevent technical debt; a clear feature flag lifecycle policy makes this routine.

- Create experiment playbooks: Standardise templates for different types of experiments to ensure consistent quality and risk management.
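Kill criteria and flag hygiene can both be encoded as simple, reviewable rules. In the sketch below, the `KillCriterion` and `FlagRecord` types, the 90-day age limit and the "treat a missing metric as a breach" policy are assumptions; `enforce` takes a `disable` callback standing in for whatever rollback mechanism your platform actually provides.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class KillCriterion:
    metric: str
    max_value: float  # disable the experiment if the metric exceeds this


def breached(criteria: list[KillCriterion], metrics: dict[str, float]) -> list[str]:
    """Names of breached criteria. A missing metric counts as a breach:
    if you can't observe it, you can't trust the experiment."""
    return [c.metric for c in criteria
            if metrics.get(c.metric, float("inf")) > c.max_value]


def enforce(criteria: list[KillCriterion], metrics: dict[str, float], disable) -> bool:
    """Automated rollback: call disable(breaches) if any criterion is breached."""
    hits = breached(criteria, metrics)
    if hits:
        disable(hits)
    return bool(hits)


@dataclass
class FlagRecord:
    key: str
    created: date
    rollout_fraction: float  # 0.0 or 1.0 means a decision has been made
    permanent: bool = False  # e.g. operational kill switches kept on purpose


def stale_flags(flags: list[FlagRecord], today: date, max_age_days: int = 90) -> list[str]:
    """Old, non-permanent flags stuck at 0% or 100% are candidates for removal."""
    cutoff = today - timedelta(days=max_age_days)
    return [f.key for f in flags
            if not f.permanent and f.created < cutoff
            and f.rollout_fraction in (0.0, 1.0)]


criteria = [KillCriterion("error_rate", 0.02), KillCriterion("p95_latency_ms", 800)]
enforce(criteria, {"error_rate": 0.035, "p95_latency_ms": 620},
        disable=lambda hits: print("Rolling back, breached:", hits))
```

Treating a missing metric as a breach errs on the side of caution: an experiment you can no longer observe is an experiment you should not keep running.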

Conclusion: Charting the Right Course

Implementing "fail fast" methodology requires more planning and discipline, not less. The most innovative organisations understand that productive failure is engineered, not accidental - it requires creating the conditions where teams can experiment safely, learn rapidly and apply those lessons systematically.

Like a seasoned Pirate Captain, the astute leader knows when to explore aggressively and when to navigate cautiously. They prepare thoroughly for both modes, understanding that even the most ambitious voyage requires proper charts, a seaworthy vessel and the wisdom to avoid sailing into hurricanes.

By implementing "fail fast" with intention rather than rhetoric, and by doing so gradually rather than haphazardly, organisations can harness its transformative power while avoiding the shoals of reckless execution that sink promising ventures. Heed the Captain's advice: 'The goal is not to fail fast; it's to learn fast.' Sometimes controlled failure is simply the most efficient path to that learning.